The folks over at Stability.ai released their text-to-image model into beta testing yesterday.

Overall, I am extremely impressed! I look forward to following along and participating in the great community they're building over there.

Compared to DALLE-2 and Midjourney, the two most advanced competing models, I found the following most impressive:

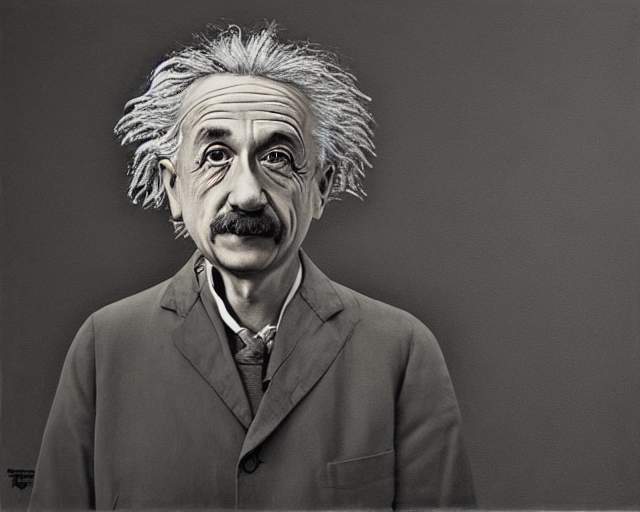

- The ability to generate realistic faces, including those of public figures. OpenAI/DALLE-2 don't allow this for PR/bias reasons. StableDiffusion definitely surpasses MJ in this regard as well, both in terms of photorealism and the relative abscence of uncanny image artifacts.

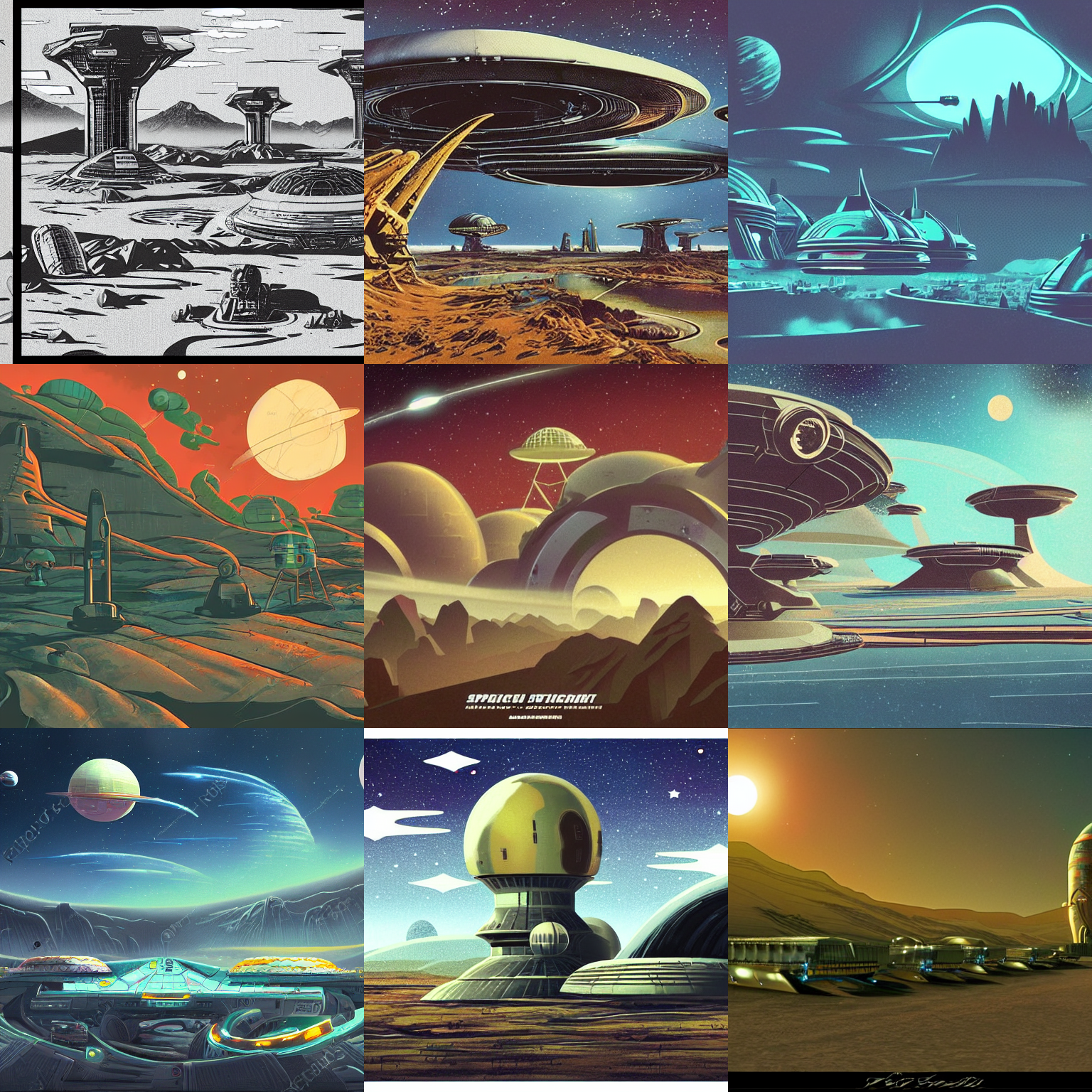

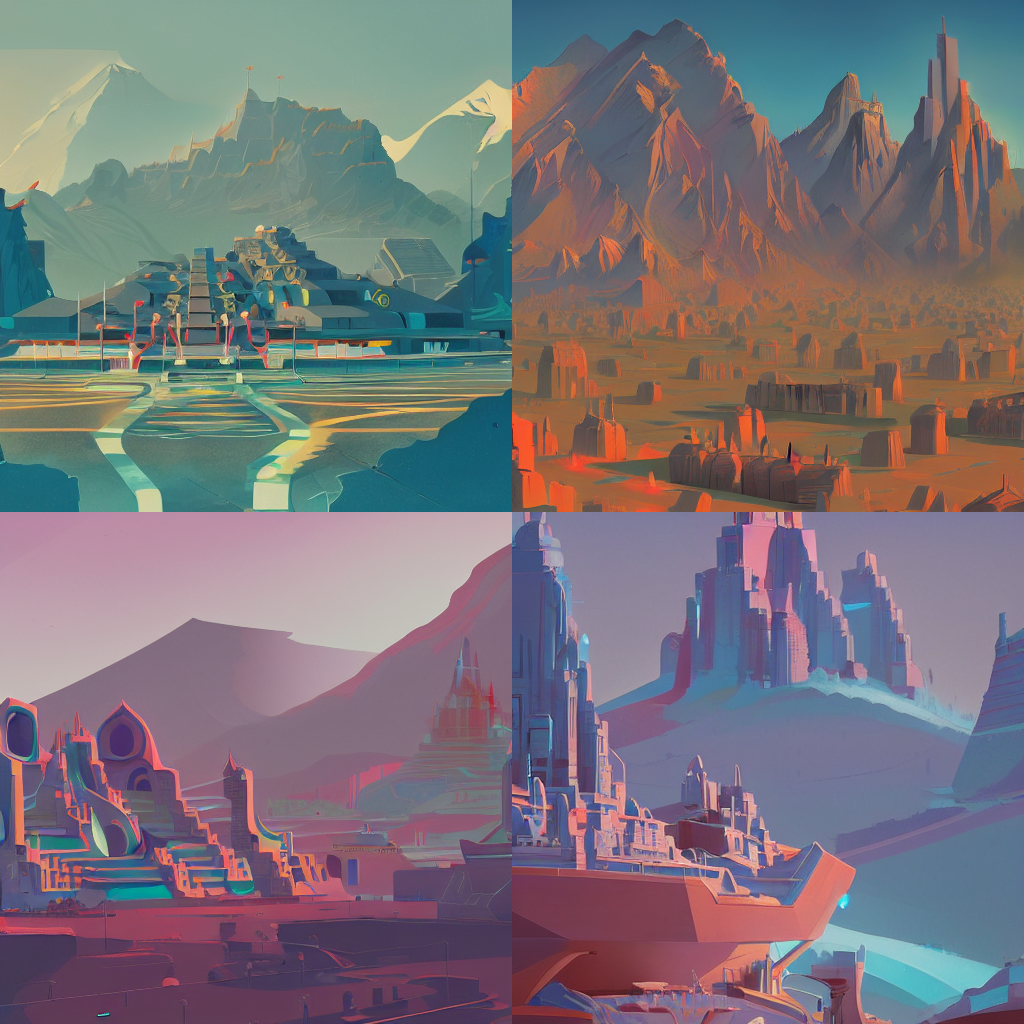

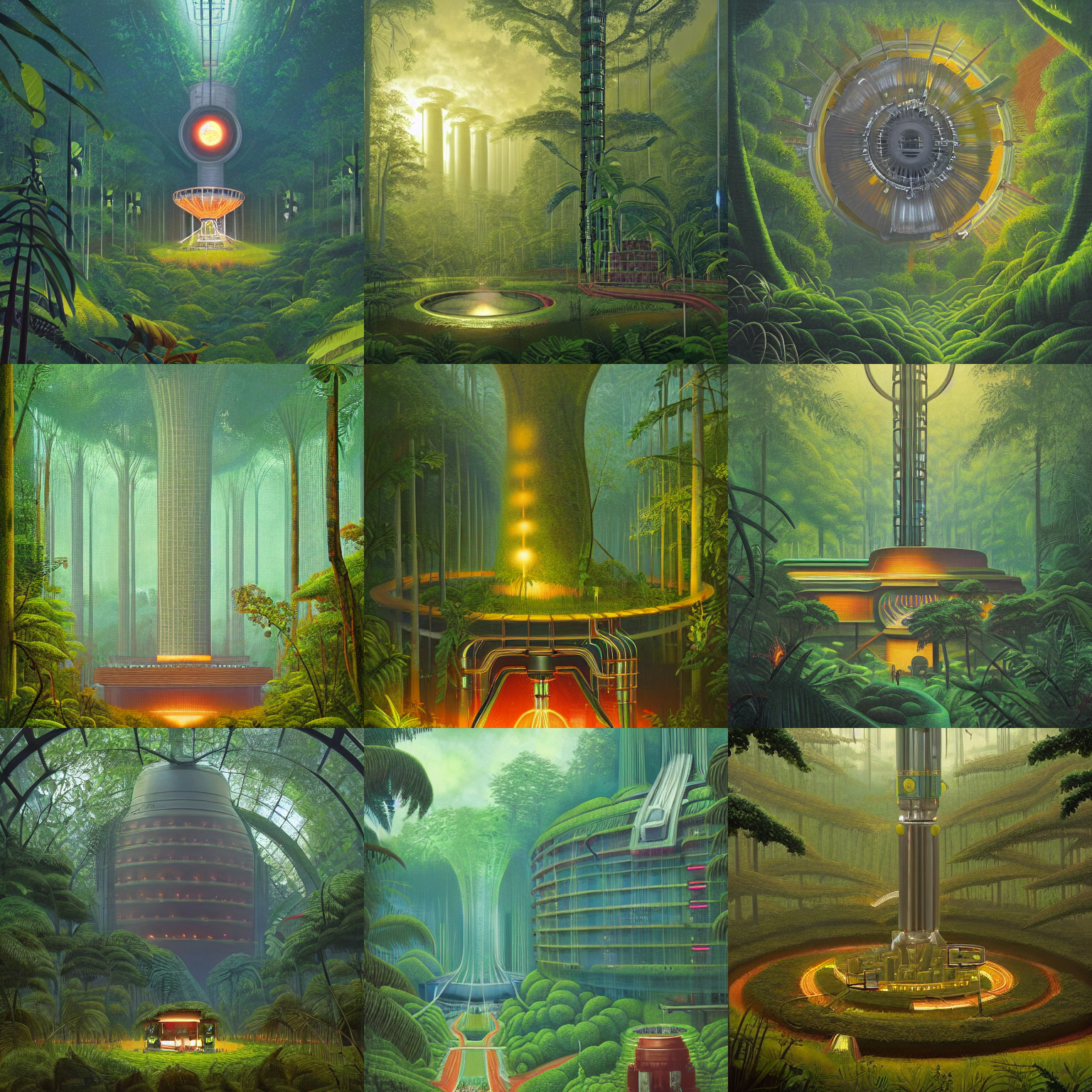

- Higher fidelity rendering for most artistic stylings, and clearly the strongest on several genres of digital art. Additionally, I was able to find two or three lesser-known artists which don't appear to be present in the DALLE-2 or Midjourney training data.

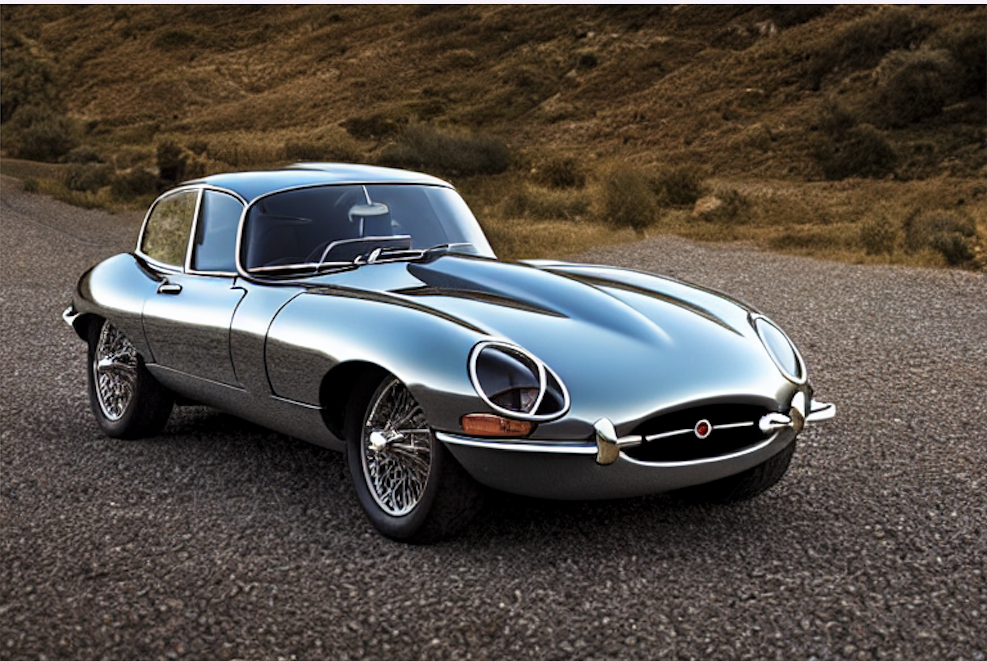

- The ability to anchor on a stable seed and iterate on the prompt. Here, the prompt is slightly tweaked around the Mustang's model year while all else remains constant (this is the one image on the page not generated by me):

However, I experienced a few areas where the model will need some further refinement compared to DALLE-2 and Midjourney:

- Larger resolution images often show the subject twice or more / lose coherence.

- Images attempting to blend two or more dissimilar styles tend to reflect only one dominant theme, especially when the subject is abstract/impossible. For example, "a pair of tennis shoes made of Swiss cheese" fails to reflect the cheese concept even with substantial tweaking.

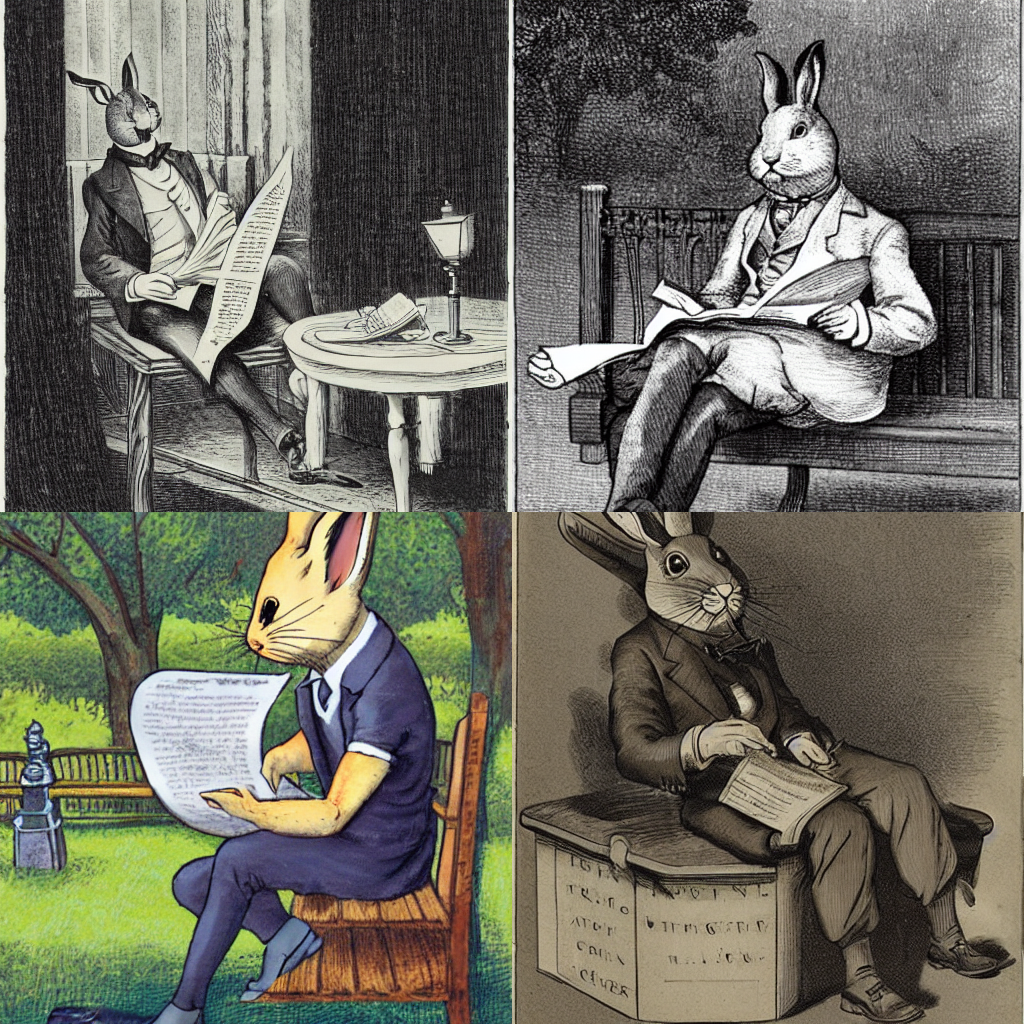

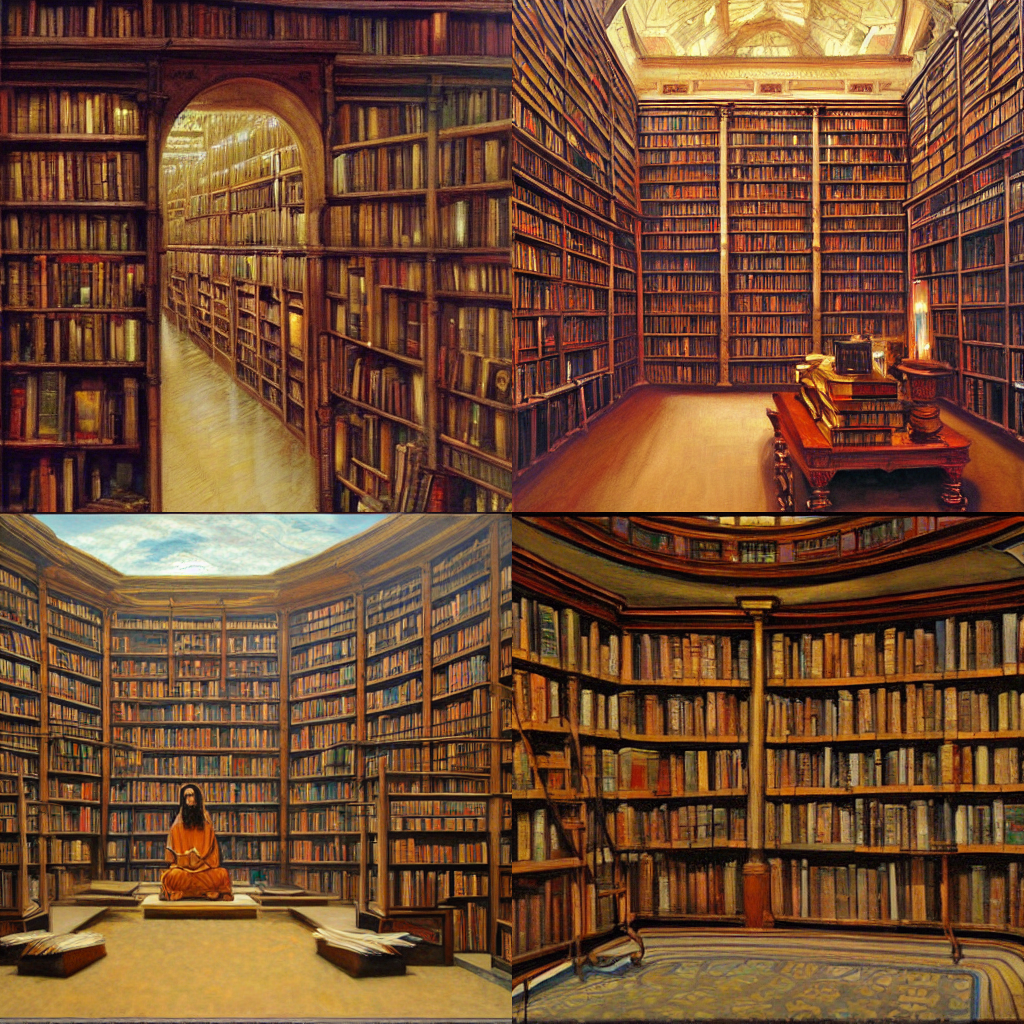

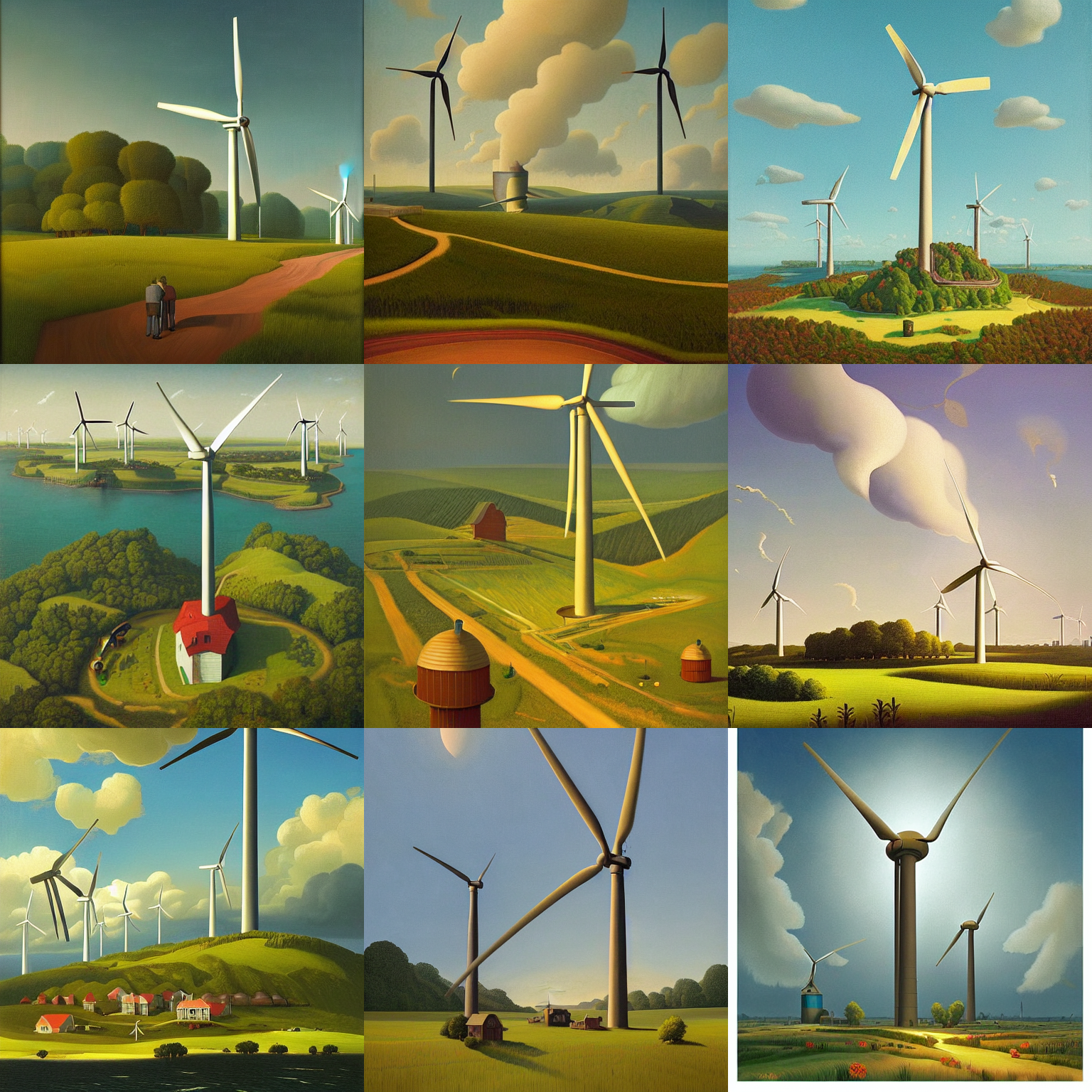

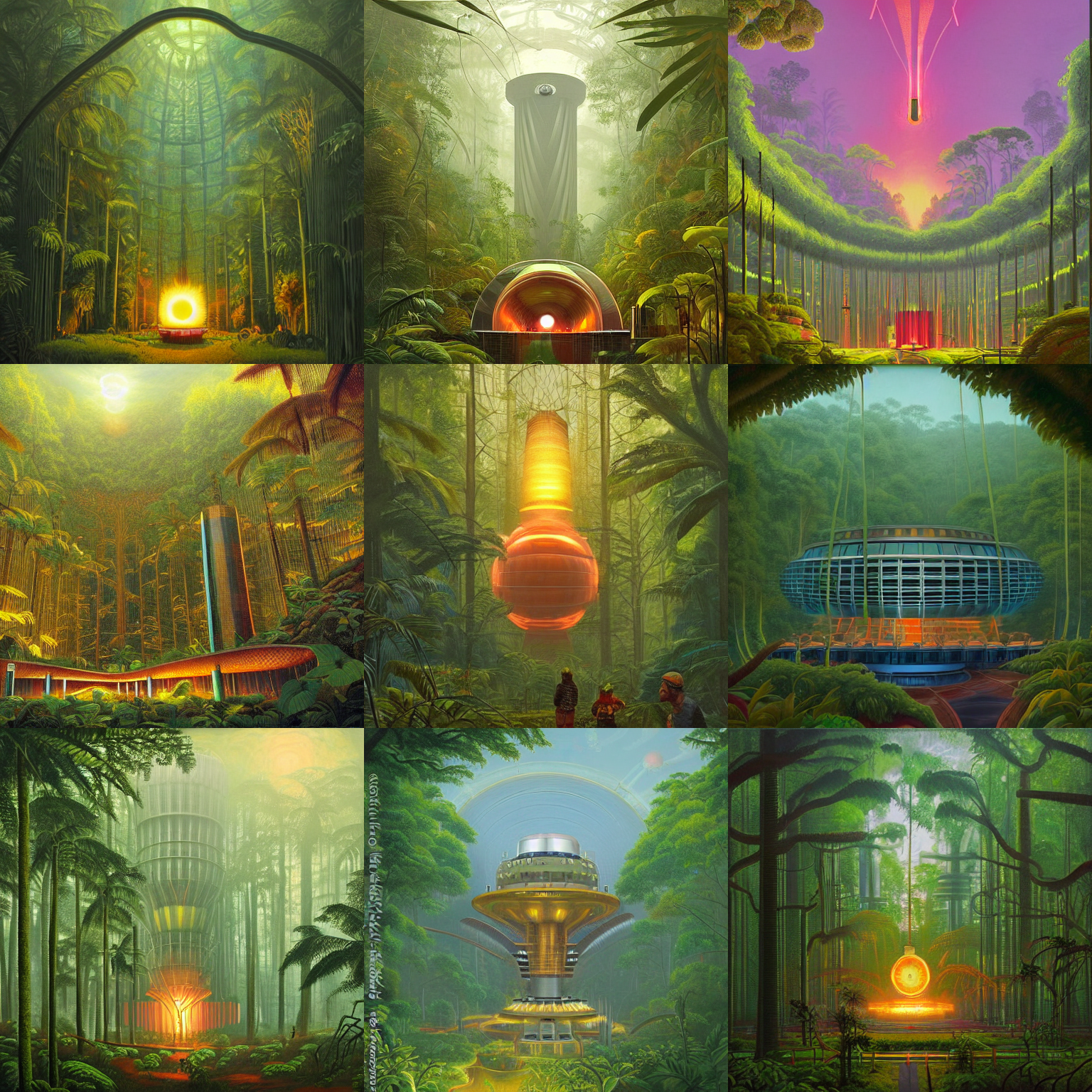

Here are a sample of images I generated in the first 24 hours: